Growth Newsletter #289

At this point, you've probably heard about AI UGC-style video ads. And if you're like me, you've approached this topic skeptically.

Six months ago, AI video capabilities weren't exactly nailing human speech. We humans are particularly good at spotting when something isn't quite right with a "person" speaking to camera.

But something's changed in the last 2-3 months. All of a sudden, I'm struggling to distinguish AI videos from real ones.

That made us decide it was time to dig into what's actually happening with AI UGC. So we spent time with Romain Torres, CEO of Arcads, one of the market-leading AI UGC platforms with around 10,000 customers.

This newsletter walks through our firsthand experience creating AI UGC ads: what we learned, what works, and what you need to know before diving in.

We were genuinely surprised by the results.

— Kevin & Gil

This week's tactics

How to Make Quality “UGC” Ads Using Only AI

Insight from Kevin DePopas (Demand Curve Chief Growth Officer) and Gil Templeton (Demand Curve Staff Writer). This newsletter is part of a paid partnership with Arcads.

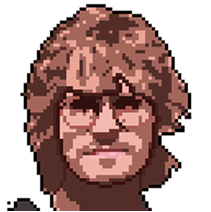

First, if you're still skeptical about the quality of AI UGC, watch these two videos made with Arcads. Can you tell these aren't real people?

Everything from the camera shake to the mouth syncing to the AI audio is insanely good.

Which, for me, naturally raises the question: is this ethical?

During our meeting with Romain at Arcads, I pressed him pretty hard on this. His response challenged my perspective. He asked, “Is Photoshop unethical?” In other words, where’s the line?

Is it ethical for McDonald’s to painstakingly create the perfect-looking burger at a photoshoot (carefully applying ketchup with a syringe), then Photoshop that image to use on marketing materials, all to sell you a compressed, depressed burger in real life?

Is it ethical for George Clooney to be the face of Nespresso when I’d bet he enjoyed a different type of coffee when he woke up this morning?

Is it ethical for a pizza brand to mix glue into its cheese to create the illusion of the perfect “cheese pull” when an actor pulls a slice out?

Is it ethical for a paid influencer to gush about how life-changing a product is when they don’t really feel that way in their heart of hearts?

Romain made the point that consumers aren’t easily fooled. They know advertising is “packaging,” and they willingly suspend their disbelief. What they want is to be entertained and to understand the ethos of what they’re buying.

The regulatory reality: Yes, the FTC and “Truth in Advertising” laws still exist in the AI era. That means you (and your AI avatars) can’t deliver misleading testimonials like “I used this product and it helped me do X, Y, and Z” if it isn’t true.

So, much like Photoshopping a burger or a bikini-clad model, the responsibility to wield this tool ethically lies with the advertiser.

On its face, AI UGC isn’t inherently more deceptive than the tactics we’ve used for decades. (After all, most TV ads are scripted and delivered by paid actors.)

The key is transparency where it’s required and following the same FTC guidelines that govern all advertising (at least in the US).

Common Misconceptions About AI UGC

Let’s take a look at some of the common hang-ups and misunderstandings people have around this type of approach.

Misconception #1: The False Negative Effect

This is the biggest barrier to full adoption, and it has more to do with impatience and unrealistic expectations than the videos themselves.

Marketers might create a couple of AI UGC ads, test them for a few days, see no tangible results, and conclude that “it didn’t work.”

Romain was all too aware of this roadblock. He said you usually need to test 10–20 ads minimum to unlock a real winner. The best performance-marketing brands test hundreds or thousands of videos in a month, helping them drive results in competitive channels.

Arcads’ client Learna didn’t hit $2M in monthly revenue because their first AI ad went gangbusters. Since starting with AI UGC, they’ve literally tested 10,000+ variations. This insight isn’t unique to AI; it’s just good testing discipline. But people mistakenly think AI is a magic bullet that works instantly.

Romain’s advice: If you’re not willing to create and run 50–100 ad variations over three months, AI UGC probably isn’t for you yet.

Misconception #2: AI Eliminates the Work

As a creative by nature, I know this one all too well. People tend to assume AI is a magic “make it” button, which it’s not…at least if you want to make something decent.

AI requires quality input from you, and its output often needs additional steps for thoughtful assembly.

Here are the types of shots you can create purely inside of tools like Arcads:

- Talking head videos

- Gesture clips (celebrating, embracing, etc.)

- “Show your product” scenes

- B-roll elements

After generating these assets, you’ll still need to stitch them together and finesse further using editing tools like CapCut or After Effects.

Romain estimates that his platform cuts production time from 3 weeks to 3 hours (but not 3 minutes), so video editing remains a bit of a bottleneck.

A good analogy for this is writing content with AI. Sure, you can use it to create better content faster. But you can also lean on it so hard, you end up making low-quality slop. Think of this as a tool that amplifies your capabilities, speed, and production budget, but it doesn’t replace your creative process.

Misconception #3: AI UGC Doesn’t Look Real or Convey Emotion

This was my initial skepticism, but here’s something to consider as a counterpoint:

People cry watching cartoons. People laugh at memes. Neither of these emotions requires a video of a real person. Last night, I was absolutely cracking up at an unhinged AI-generated video in my TikTok feed.

But even setting that aside, AI videos are certainly reaching the realism threshold and getting better every day.

Romain shared an example to this point. Olivia Moore (a partner at the tech-forward investment firm a16z) created a TikTok account featuring an AI character who was rushing sororities at the University of Alabama. In the character’s second-ever video, which has 177k+ views, she was crying, and young viewers responded with emotional support.

Regardless of whether people see these AI-generated characters as real or not (this gap seems to be getting smaller and less important to people at the same time), these avatars are capable of evoking emotion.

So the last remaining barrier is good storytelling: you need to build the story and intrigue around these characters to drive emotion.

What You Need to Succeed With AI UGC Ads

Before we get under the hood and walk through our tutorial, here are Romain’s recommendations for who this platform makes the most sense for.

- Ad spend: Around $10k+ per month helps justify the platform investment (though Romain has seen people test at lower budgets)

- Testing discipline: Systematic creative testing process already in place

- Time commitment: 3–4 months of consistent testing, not just a one-week experiment

- Skills: Basic video editing (CapCut or similar)

- Mindset: Comfortable creating a higher volume of ads to test (think dozens of ad variations monthly, not just 2-3)

If you don’t meet all of these criteria, it doesn’t mean AI UGC categorically won’t work for your business. We’re just cautioning that you need budget, you need to be rigorous about testing, and you need to commit for a reasonable period of time to unlock success with AI UGC.

Our Live Case Study: AI UGC Ads for the Growth Program 2.0

Let’s walk you through our process for using Arcads to generate video ads promoting the Demand Curve Growth Program 2.0.

Step 1: Research & Hypothesis Formation

This is the part where we poke and prod around, taking stock of what’s working for competitors or good analogs in other categories.

- Foreplay - Shows top-performing ad assets by niche

- Meta Ads Library - Search competitors and educational products to see what they’re running long-term (if an ad runs for 3+ months, it’s a sign that it’s probably working)
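If you keep a running log of ads you spot in the Meta Ads Library along with the date each one started running, the “3+ months” rule of thumb becomes a simple filter. This is a minimal sketch, assuming you record start dates by hand and treat 90 days as roughly three months; it doesn’t call any Meta API, and the ad names and dates are made up.

```python
from datetime import date


def long_running_ads(ads: list[tuple[str, date]], today: date,
                     min_days: int = 90) -> list[str]:
    """Return the names of ads that have been live for at least `min_days`.

    `ads` is a hand-kept list of (ad name, start date) pairs. 90 days is an
    assumed stand-in for "3+ months".
    """
    return [name for name, started in ads if (today - started).days >= min_days]


# Illustrative entries only, not real ads.
tracked = [
    ("competitor-course-hook", date(2025, 1, 1)),
    ("competitor-webinar-promo", date(2025, 5, 1)),
]
print(long_running_ads(tracked, today=date(2025, 5, 15)))  # ['competitor-course-hook']
```

A long run is only a signal, not proof: an advertiser might simply have forgotten to turn an ad off.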

We also looked at what Arcads’ customers in education and newsletters are doing. Arcads’ clients in the micro-learning vertical have found success with the platform.

Our three hypotheses for Growth Program ads:

- Problem-Agitation Angle: “Stuck testing random tactics without a system? Here’s how 3,000+ founders built growth engines…” (Targeting the scattered founder)

- Transformation Story: “We went from $0 to $XM by following one growth framework…” (Social proof + aspirational)

- Authority/Contrarian: “Most growth advice is garbage. Here’s what actually works…” (Pattern interrupt)

We’re going to roll with the first one (the problem-agitation angle) since that best aligns with our research and strategy.

Step 2: Script Development

Key insight from Romain: Most people underinvest in the script. Without the right input and editing, ChatGPT and Claude spit out mediocre scripts. Good scripts tend to use:

- Simple, conversational language

- Specific customer verbatims (pull from Reddit, customer interviews, TikTok search insights)

- Emotional arc (not monotone)

- Clear hook in first 3 seconds

So whether you use AI to help write your scripts or write your own from scratch (like the pilgrims used to do), go for it.

Here’s the prompt I fed ChatGPT to create one for me:

Write a short UGC-style testimonial script for a startup founder talking directly to camera about their experience with The Demand Curve Growth Program.

The tone should be conversational, honest, and natural, like something you’d see on TikTok or YouTube Shorts, not a polished ad.

Structure it in four short, distinct sections.

- The frustration of trying every growth tactic (Facebook ads, SEO, partnerships, cold email, etc.) and failing to get traction.

- The key realization that tactics don’t matter without a system.

- The solution—how the Growth Program helped them build a real, repeatable growth engine.

- A natural, founder-to-founder style CTA encouraging others to check it out, mentioning there’s a waitlist but it’s worth it.

Keep it at or below 600 characters and 30ish seconds of spoken length, use plain English, and make it sound like something a real founder would say in their own words, not overly “salesy.”

After a little finessing, and in line with the ethics discussion above, here’s a solid script we can roll with. It keeps the same punch and relatability without implying firsthand experience:

“Most founders go through the same phase: trying every growth trick they can find: ads, SEO, partnerships, you name it. And it never really scales. What actually works is having a system. That’s what Demand Curve’s Growth Program teaches: a repeatable way to grow.”
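The prompt caps scripts at 600 characters and roughly 30 seconds of speech, and it’s worth checking a draft against both before you spend credits on it. This is a rough sketch, assuming a casual delivery pace of about 150 spoken words per minute (an assumption, not an Arcads figure); tune that number to your own voices.

```python
# Assumption: casual UGC delivery runs about 150 spoken words per minute.
WORDS_PER_MINUTE = 150


def check_script(script: str, max_chars: int = 600, max_seconds: float = 30,
                 wpm: float = WORDS_PER_MINUTE) -> dict:
    """Report length stats for a script and whether it fits both limits."""
    chars = len(script)
    words = len(script.split())
    seconds = words * 60 / wpm  # estimated spoken length
    return {
        "chars": chars,
        "words": words,
        "est_seconds": round(seconds, 1),
        "fits": chars <= max_chars and seconds <= max_seconds,
    }


script = (
    "Most founders go through the same phase: trying every growth trick they "
    "can find: ads, SEO, partnerships, you name it. And it never really scales. "
    "What actually works is having a system. That's what Demand Curve's Growth "
    "Program teaches: a repeatable way to grow."
)
print(check_script(script))  # 44 words, est_seconds 17.6, fits True
```

At that assumed pace, this script leaves room for a CTA line before it hits the 30-second mark.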

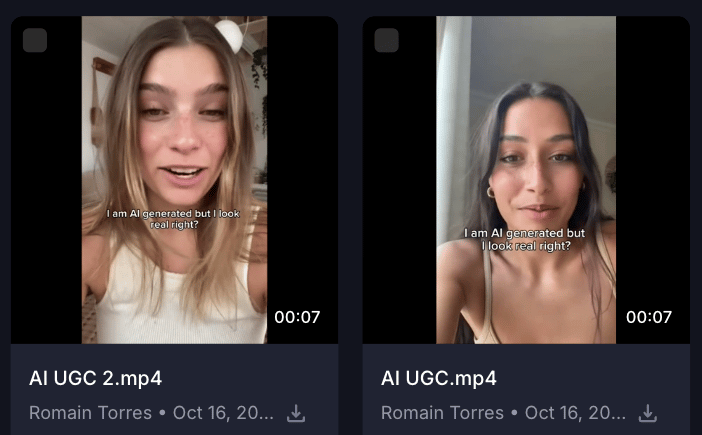

Step 3: Adding Voice and Emotion

Now it’s time to get into the platform and start putting this thing together.

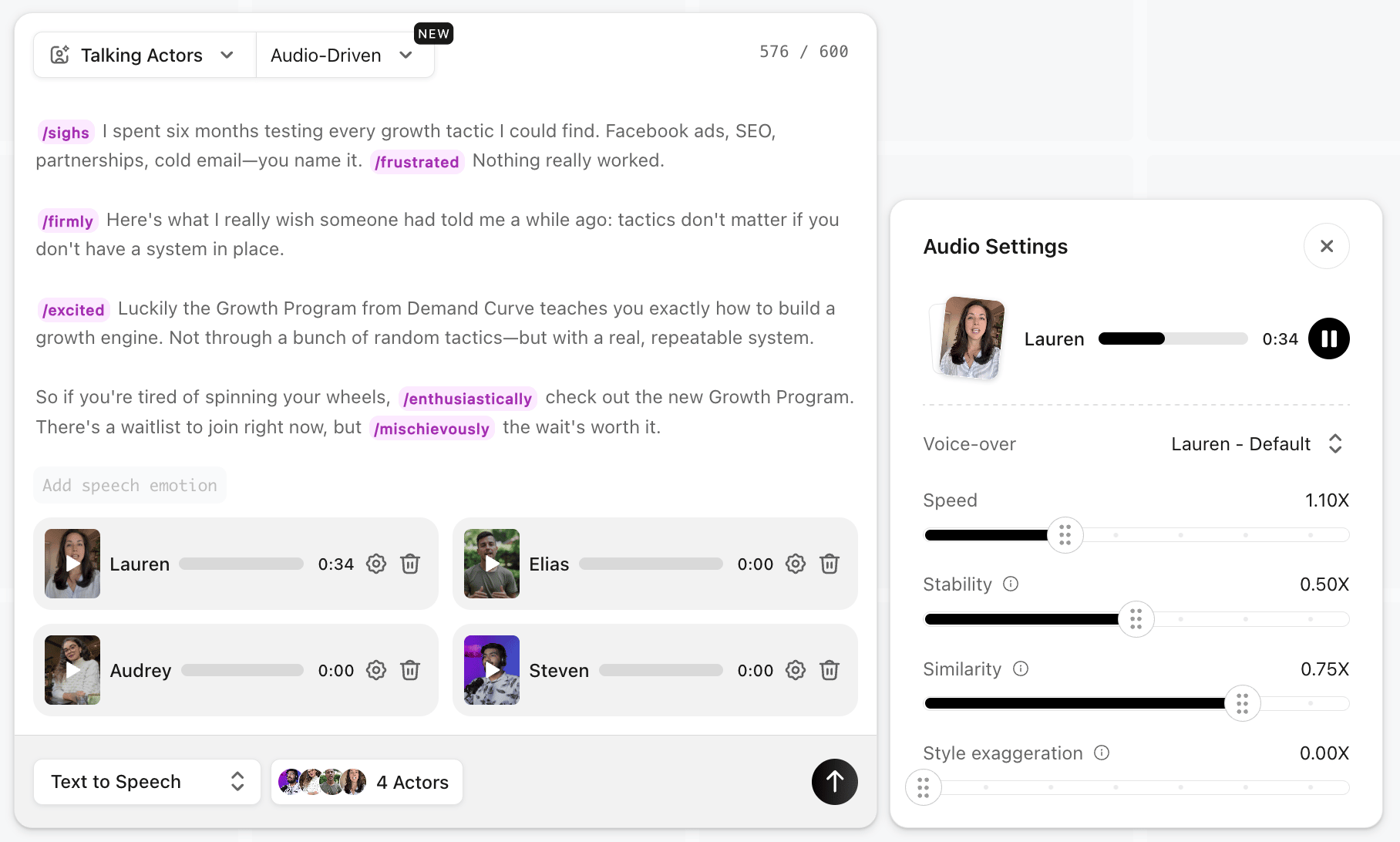

Arcads lets you add emotion tags directly in the script using brackets: [frustrated], [excited], [whisper], [sad]. There’s also an automated button that suggests where to place these tags.
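If you draft and revise scripts outside the platform, a small helper can list the bracket tags you’ve placed and produce a tag-free version (for example, to count only the spoken characters). This is a sketch that assumes tags are single lowercase words in square brackets, like the examples above; it only processes text and doesn’t interact with Arcads.

```python
import re

# Assumption: tags look like [frustrated] or [whisper]: one lowercase word in brackets.
TAG_PATTERN = re.compile(r"\[([a-z]+)\]")


def extract_tags(script: str) -> list[str]:
    """Return emotion tags in the order they appear in the script."""
    return TAG_PATTERN.findall(script)


def strip_tags(script: str) -> str:
    """Remove tags and collapse the whitespace they leave behind."""
    return " ".join(TAG_PATTERN.sub("", script).split())


tagged = ("[frustrated] I tried everything. [excited] Then I found a system. "
          "[mischievously] There's a waitlist.")
print(extract_tags(tagged))  # ['frustrated', 'excited', 'mischievously']
print(strip_tags(tagged))    # I tried everything. Then I found a system. There's a waitlist.
```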

There are two approaches to this:

Option A: Script tagging (what we’ll be using)

- Write script, add emotion tags manually or via AI suggestion

- Select from voice library or generate custom voice from prompt

- Arcads interprets tags and modulates voice accordingly

Option B: Speech-to-speech

- Record yourself reading the script with natural emotion

- Arcads clones the tonality and applies it to the AI avatar

- Best for ads in your native language (Romain uses this for French ads)

Pro tip from Romain: If you have a winning UGC ad with a real person, you can upload that audio and use speech-to-speech to replicate the tonality with different AI avatars. This lets you scale a proven winner fairly easily.

Here we’ve uploaded our script, selected our video format (Talking Actors), and selected those emotive “audio-driven” avatars, and the AI automatically tagged five emotional cues.

I wasn’t expecting to see “mischievously” at the end, but I could also see that working for a snarky little eyebrow raise at our sign-off. We’ll roll with it for now and see what we get.

Step 4: Avatar Selection

Now it’s time to find our formerly frustrated founders. We want to use avatars that resemble our target audience, so this is what we looked for.

Our approach:

- 30-45 years old

- Male and female

- “Professional enough” appearance

- Prioritize avatars with a scroll-stopping presence

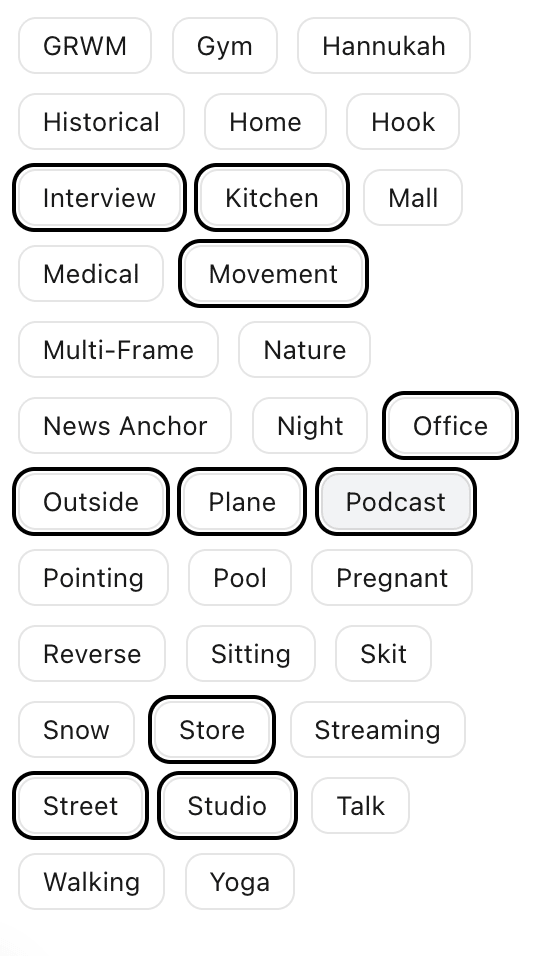

We toggled the appropriate demographic selections for our crowd, and we were also able to pick from a range of situations, backgrounds, occasions, etc. that might fit our founder persona.

Romain’s note on models: Arcads now offers multiple AI models (Talking Actors, Audio-driven, Omni-human). The newer “Audio-driven” and “Omni-human” models generally convey emotion better because they interpret the audio and facial expressions simultaneously. Start with those.

Once we entered our filters, we were met with 200+ avatar options. Hovering over an avatar plays its intro video, which is a great way to get a quick feel for its personality.

From that group, I chose four that fit the bill for our audience and showed a range of situations/environments/production levels: Audrey, Elias, Steven, and Lauren.

After selecting these four avatars, we could preview and adjust each one’s delivery to match what we were going for, and the platform regenerated the audio shortly after each adjustment. We could do this for all our “actors” at once, which made it easy to compare them.

Step 5: Generate the Assets

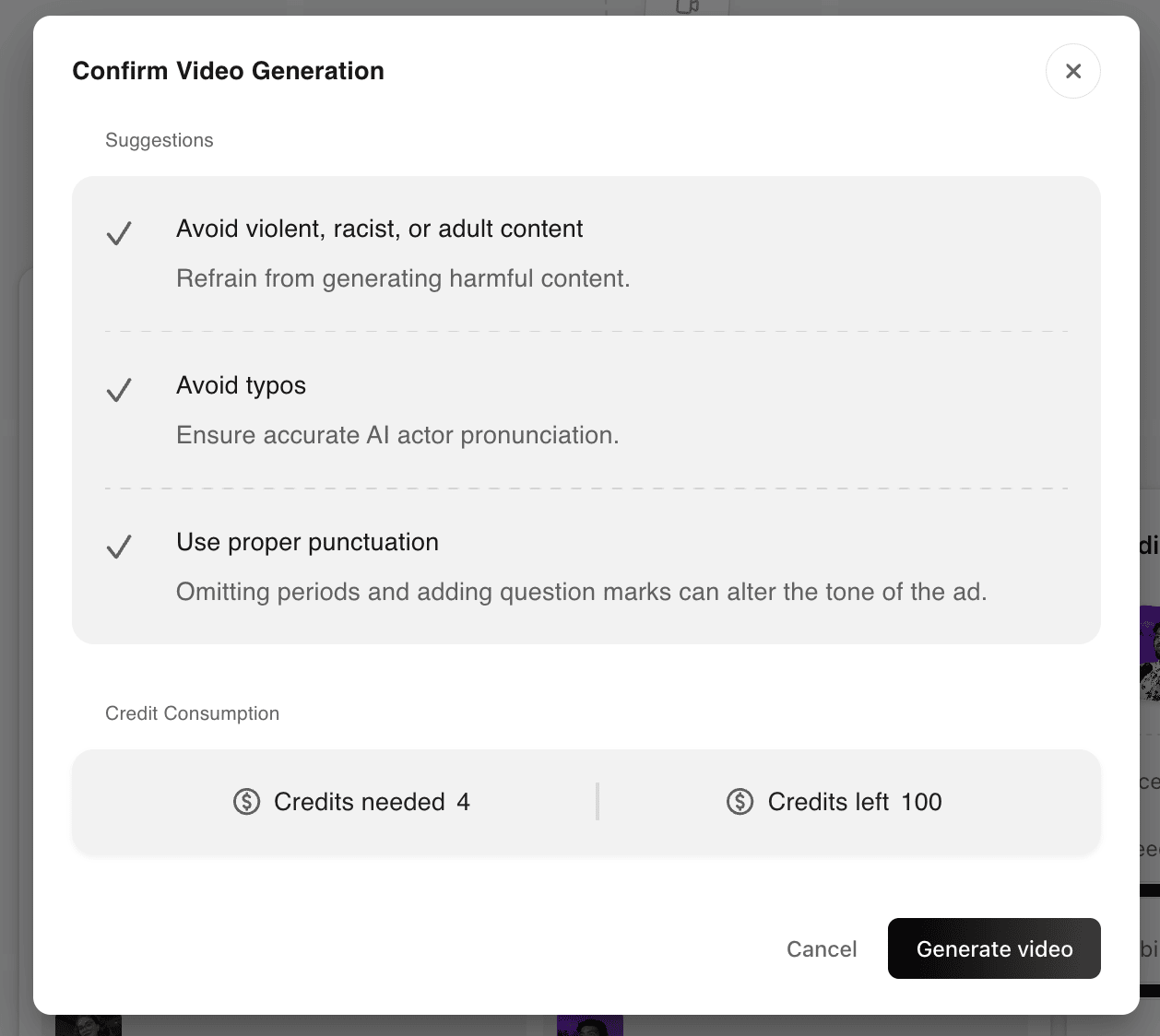

After tweaking the audio outputs, I clicked the black arrow at the bottom, which took me to one final step: generating all four assets at once. That took about 10 minutes.

Arcads works on a simple “credits” system, and unlike many AI image/video platforms, it’s very easy to see how many credits you’re using and how many you have left. My four marquee videos cost four credits. Nice and simple.

The results were pretty convincing if I’m being honest. Some were better than others in this first batch (Lauren, shown below, was amazing in particular), but nobody fell into the uncanny valley.

Only someone with a keen eye/ear and a healthy dose of skepticism would ever suspect my video (screenshotted below) was made with AI, especially since a good editor could patch over any hiccups with B-roll clips and keep the energy up.

Unfortunately, this is the part where a static email newsletter can only show you so much about the fun I had and the quality outputs I generated. Check out the full output here.

To me, it seems like the videos that were more casual and had more movement came out better than ones with locked shots, polished production, or straight deliveries.

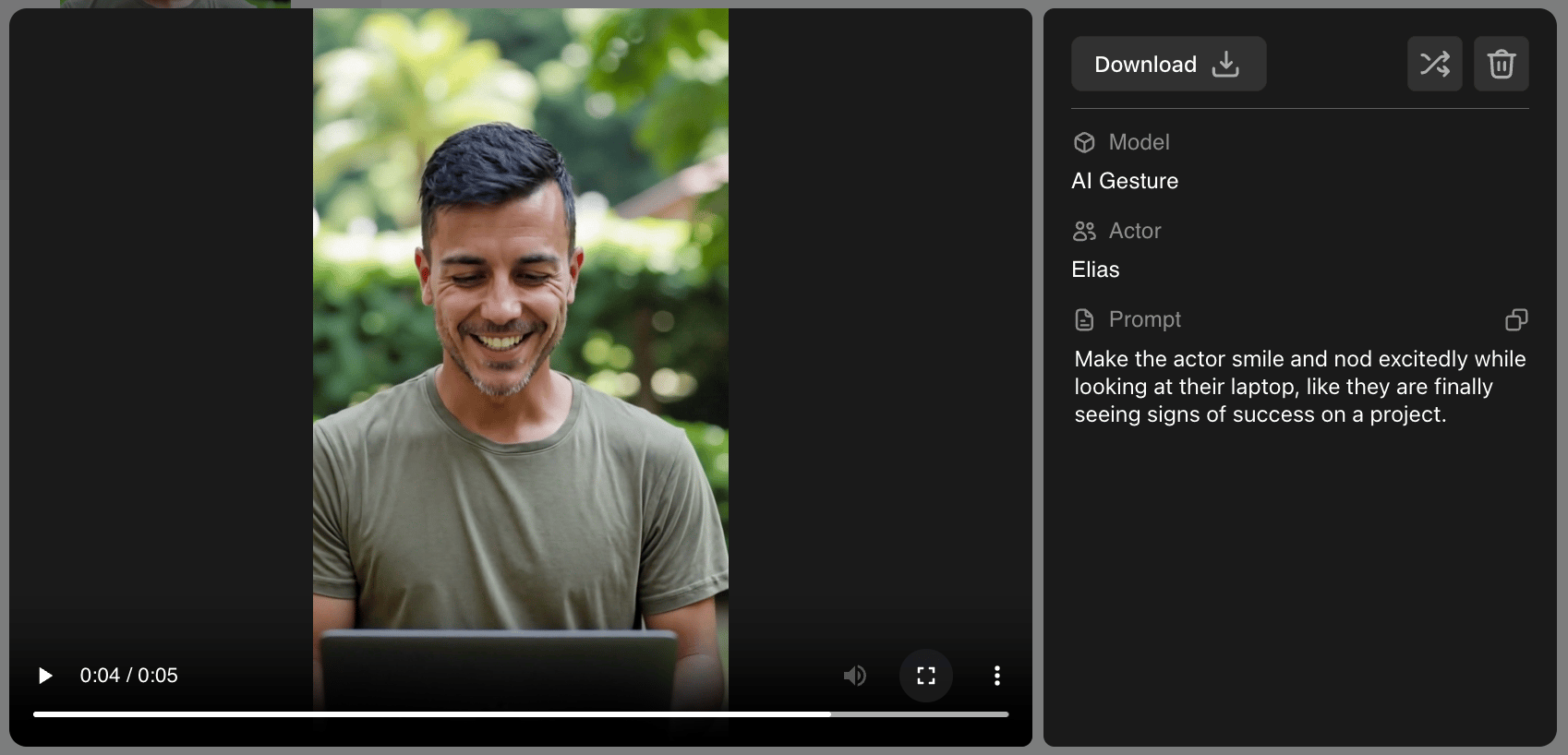

I also easily generated a complementary “gesture” clip where one of our avatars, Elias, opens a laptop and smiles like he’s just seen some good news. Same exact process: I just toggled the model to “AI Gesture” and provided a sentence of direction. Since it was a shorter, less involved clip, it only cost half a credit.

These kinds of clips would be useful in the video-editing process where you need a quick cutaway or motion to break up the monotony of a talking head visual.

Also, if you see a moment where the mouth and audio aren’t working in lockstep, or if you just need a Band-Aid over some AI imperfection, these types of shots would be super helpful.
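Because volume matters so much, it helps to estimate credits before planning a test batch. This sketch assumes the per-clip costs from our single run (1 credit per talking-actor video, 0.5 per short gesture clip) hold across models, clip lengths, and plans, which may not be true; check current pricing in your account.

```python
# Per-clip costs observed in our test run. Assumption: they apply to every
# model, clip length, and plan.
CREDIT_COST = {"talking": 1.0, "gesture": 0.5}


def estimate_credits(batch: dict[str, int]) -> float:
    """Total credits for a batch such as {"talking": 20, "gesture": 20}."""
    return sum(CREDIT_COST[kind] * count for kind, count in batch.items())


# A hypothetical 20-ad batch, each ad with one gesture cutaway.
print(estimate_credits({"talking": 20, "gesture": 20}))  # 30.0
```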

I tried another type of shot, where someone shows your app or website on their phone. The natural reflection of the window was a nice touch, although I had trouble finding our four selected actors for this type of shot.

How We’d Edit From Here

If the team decided to move forward with this approach to promote our Growth Program 2.0, I’d take my talking head videos and B-roll videos, edit them together in CapCut for pacing and naturalness, add captions (critical for sound-off viewing), and maybe consider some other touches like transitions, a light music bed, superimposed text, or branded assets.

Romain says younger audiences tend to need a more dynamic, lively edit, while older audiences (50+) often respond best to minimal editing (talking head and captions).

This step is the biggest bottleneck in the process, but it’s key to creating assets that work hard and paint you in a professional light.

Lastly, How Would We Test?

Keep in mind, this is not a one-ad test. This takes volume, patience, and testing. Here’s how we’d test:

- Create 5-10 variations of this same concept (different avatars, slightly different scripts, varying editing styles)

- Test all variations simultaneously on Meta

- Run for a minimum of 7–14 days (or until the ads exit the learning phase, or until one ad set clearly pulls ahead of the others)

- Analyze which elements are working (avatar? script hook? editing style?)

- Double down on the winners, drop the losers

- Repeat weekly, bi-weekly, or monthly (depending on how much you’re spending)
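The “double down on the winners, drop the losers” step works best when the rules are set before the test starts. Here’s a minimal sketch of one way to do that, assuming cost per acquisition (CPA) is your decision metric and using thresholds we made up for illustration (they don’t come from Meta or Arcads). The numbers in the usage example are also invented.

```python
from dataclasses import dataclass


@dataclass
class Variation:
    name: str         # hypothetical label for one ad variation
    spend: float      # dollars spent during the test window
    conversions: int  # e.g. waitlist sign-ups attributed to this ad

    @property
    def cpa(self) -> float:
        # Cost per acquisition; infinite until the ad converts at least once.
        return self.spend / self.conversions if self.conversions else float("inf")


def triage(variations: list[Variation], target_cpa: float,
           min_conversions: int = 5, spend_multiple: float = 3.0):
    """Split variations into (winners, losers, undecided).

    Assumed rules: an ad has enough data once it has `min_conversions`
    results or has spent `spend_multiple` times the target CPA. Ads with
    enough data win if their CPA is at or below the target, else they lose.
    """
    winners, losers, undecided = [], [], []
    for v in variations:
        enough_data = (v.conversions >= min_conversions
                       or v.spend >= spend_multiple * target_cpa)
        if not enough_data:
            undecided.append(v)
        elif v.cpa <= target_cpa:
            winners.append(v)
        else:
            losers.append(v)
    winners.sort(key=lambda w: w.cpa)  # cheapest winner first
    return winners, losers, undecided


# Made-up numbers for illustration only.
batch = [
    Variation("v1", spend=400, conversions=10),  # $40 CPA
    Variation("v2", spend=300, conversions=2),   # $150 CPA after $300 spent
    Variation("v3", spend=60, conversions=1),    # too early to call
    Variation("v4", spend=450, conversions=15),  # $30 CPA
]
winners, losers, undecided = triage(batch, target_cpa=50)
print([v.name for v in winners], [v.name for v in losers], [v.name for v in undecided])
# ['v4', 'v1'] ['v2'] ['v3']
```

Swap in whichever metric you actually optimize for; undecided ads simply roll into the next testing cycle.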

Romain’s process recommendation:

- Week 1-2: Test 20 ads across multiple script angles

- Week 3-4: Create 10 more variations of winning scripts

- Month 2: Iterate based on data

- Month 3: Scale winners, introduce new test concepts

The Takeaway

We’re there. We’re at the point where AI video assets (when given quality input, good judgment, and a strong edit) can pass as realistic, engaging videos made by real people. My experience with Arcads convinced me of that in half an hour.

This technology can be especially useful for cash-strapped startups that need to stretch every dollar. But while it can seriously alleviate some production and budget burdens, it’s not a magic “make it” button.

It’s a powerful tool that requires discipline and many of the same established growth fundamentals, but it allows you to make better ads faster, when done right.

With great power comes great responsibility, so generate responsibly.

Gil Templeton

Demand Curve Staff Writer

Kevin DePopas

Demand Curve Chief Growth Officer